A Modern Decision Making Framework for SaaS Product Teams

Tired of gut-feel decisions? Learn a modern decision-making framework for SaaS teams to prioritize features, reduce waste, and drive real revenue growth.

A decision-making framework is simply a structured way to make choices. Instead of relying on gut feeling or on whoever shouts the loudest, it gives you a repeatable system for weighing your options against what actually matters. For product teams, this is a game-changer: it turns a messy pile of opinions into a clear, objective process tied directly to your goals.

Why Gut-Feel Product Decisions Are Costing You Money

Let’s be honest. We’ve all been in that meeting where the product roadmap is shaped more by a persuasive executive than by actual data. This kind of gut-feel approach is common, and while it can feel decisive in the moment, it’s a slow-burning disaster for your budget and your team's morale.

When you let intuition drive, you're essentially gambling with your most valuable resources: time and talent.

The fallout is painfully predictable. Engineering hours vanish into features that users ignore. The team constantly misses deadlines because they're being pulled in a dozen different directions, none of which align with what the business truly needs. Before you know it, you’re left with a disjointed product and a burned-out team that’s always reacting instead of leading.

The True Cost of Unstructured Decisions

This isn't just about being inefficient; it's a massive competitive disadvantage. While your team is busy building pet projects for internal stakeholders, your competition is shipping features that solve real customer problems and make them money. It’s a widespread issue—a McKinsey survey found that 60 percent of executives feel that bad decisions happen just as often as good ones.

Without a system, you’re almost guaranteed to run into these problems:

- Wasted Development Cycles: You burn through engineering resources on features that don’t move the needle on activation, retention, or revenue.

- Organizational Misalignment: Sales wants one thing, marketing wants another, and product is caught in the middle. Everyone is fighting for their own priorities because there’s no shared vision.

- Decreased Team Morale: Nothing kills motivation faster than pouring your heart into a feature only to see it flop. When this happens repeatedly, your best people start to check out.

Introducing a Framework as the Antidote

This is where a decision-making framework comes in. It’s the antidote to all that chaos. This isn't about adding red tape or slowing things down with pointless rules. It’s about creating a transparent, repeatable system that helps you cut through the noise and focus on what will deliver real value.

Think of it as a shared language for your entire organization. It creates a single source of truth that aligns every department on what truly matters to your customers and, ultimately, your bottom line.

By setting up a clear method for evaluating ideas, you fundamentally change the conversation. It’s no longer about "who wants this?" but "what impact will this have?" That simple shift empowers your team to make confident, data-backed choices that build momentum and create a product people are actually excited to use.

From Theory to the Tech World: A Quick History

The whole idea of a decision-making framework wasn't cooked up in some Silicon Valley startup's brainstorming session. It actually has deep roots going back to the mid-20th century. That’s when mathematicians and economists started tackling a huge problem: how do you make a truly rational choice when the future is uncertain? Their goal was to pull decision-making out of the realm of gut feelings and turn it into a more objective, mathematical process of weighing risks and rewards.

This was a massive change in perspective. Up until then, big business decisions were mostly based on the experience and intuition of leaders. The real breakthrough came from a few pioneers who created structured models to put a number on abstract ideas like value, risk, and utility.

Turning Economic Theory into Product Backlogs

This early work completely changed how we think about making choices. Thinkers like John von Neumann and Oskar Morgenstern, in their 1944 book Theory of Games and Economic Behavior, laid the groundwork for what's known as expected utility theory. The concept is simple but powerful: you evaluate a decision by multiplying the probability of each possible outcome by its value (or "utility") and adding up the results. The option with the highest expected utility is the rational choice. You can get a deeper look into this in the historical context of decision theory.
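To make the idea concrete, here is a minimal Python sketch of an expected-utility calculation. The two feature "bets," their probabilities, and their dollar figures are invented for illustration, and dollars of new ARR stand in for utility, which is a simplification: utility theory does not require utility to be linear in money.

```python
def expected_utility(outcomes: list[tuple[float, float]]) -> float:
    """Weight each outcome's utility by its probability and sum the results.

    `outcomes` is a list of (probability, utility) pairs for mutually
    exclusive outcomes, so the probabilities must add up to 1.
    """
    total_probability = sum(p for p, _ in outcomes)
    if abs(total_probability - 1.0) > 1e-9:
        raise ValueError(f"probabilities must sum to 1, got {total_probability}")
    return sum(p * u for p, u in outcomes)


# Hypothetical bets, with dollars of new ARR used as a stand-in for utility.
risky_feature = [(0.3, 50_000), (0.7, 0)]  # 30% chance of a big win
safe_feature = [(0.9, 12_000), (0.1, 0)]   # 90% chance of a modest win

for name, bet in [("risky", risky_feature), ("safe", safe_feature)]:
    print(name, round(expected_utility(bet)))
```

The risky bet comes out ahead in expectation ($15,000 versus $10,800) even though it usually pays nothing, which is exactly the kind of trade-off that is hard to eyeball.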

Believe it or not, this created a direct line from high-level economic theory to the prioritization headaches product teams deal with every single day. The core idea is the same, whether you're trying to predict market behavior or decide which feature to ship next.

The real evolution isn't the theory itself, but the incredible speed and scale at which we can now apply it. What used to take experts weeks of manual analysis can now happen in near real-time, thanks to software.

This incredible acceleration is what makes modern frameworks so different from their ancestors. The challenge for SaaS teams isn't just about making the single "right" choice; it's about making thousands of small, data-informed choices, quickly and consistently.

The Modern Leap: Data and Automation

For decades, putting these theories into practice meant wrestling with spreadsheets, doing manual calculations, and relying heavily on human guesswork. A product manager might try to score different features, but the "Impact" or "Confidence" scores were still just educated guesses. The whole process was slow, clunky, and completely disconnected from what customers were actually doing.

Today, technology has become the engine that supercharges these classic principles. This is where the real transformation has happened. Modern platforms are the next logical step in this evolution, taking the core logic of decision theory and automating it with live data.

Instead of just guessing a feature's potential impact, these systems can actually quantify it. They do this by plugging directly into the sources of customer feedback and behavior:

- Support Tickets: They can analyze patterns in platforms like Zendesk or Intercom to spot the bugs or feature gaps causing the most pain.

- Usage Metrics: They track how users engage with the product to see which features drive value and which ones lead to churn.

- Sales Conversations: They can pull insights from sales calls to identify the features that are preventing big deals from closing.

This is the real power of a modern decision-making framework. It’s not a static, theoretical exercise anymore. It’s a living, breathing system that constantly pulls in new data to give you a clear, quantifiable picture of what truly matters.

For example, a platform like SigOS puts these principles to work by automatically assigning a dollar value to bugs and feature requests, turning abstract user feedback into concrete revenue impact. This lets product teams shift from slow, manual analysis to getting instant, data-driven insights—a critical skill we explore more in our guide to self-serve analytics for product teams.

Comparing Popular Product Prioritization Frameworks

Picking the right decision-making framework can feel like trying to choose just one tool for a massive workshop—it’s a bit overwhelming. Let's cut through the noise and look at the most popular options that top SaaS product teams actually use. Think of this as your field guide for turning a chaotic backlog into a strategic, high-impact roadmap.

We're going to break down four heavyweights: RICE, ICE, Weighted Scoring, and Cost of Delay. Each one has its own personality. RICE is like a carefully balanced investment portfolio, weighing different factors for steady, long-term growth. ICE, on the other hand, is the quick gut-check, perfect for scrappy teams that need to place smart bets without getting bogged down in analysis.

The RICE Framework: A Calculated Approach to Opportunity

Developed by the team at Intercom, the RICE scoring model was designed to inject a healthy dose of objectivity into prioritization. It’s not about guesswork; it forces your team to think critically about why an initiative matters by breaking it down into four distinct factors.

The formula itself is pretty straightforward: (Reach x Impact x Confidence) / Effort.

- Reach: How many people will this feature actually touch in a given timeframe? (e.g., 500 customers per month)

- Impact: How much will this move the needle on a key goal, like adoption or conversion? This is often scored on a simple scale: 3 for massive impact, 2 for high, 1 for medium, and 0.5 for low.

- Confidence: How sure are you about your other estimates? This is a crucial gut-check, expressed as a percentage: 100% for high confidence, 80% for medium, and 50% for a low-confidence shot in the dark.

- Effort: What’s the total time investment from your product, design, and engineering teams? This is usually measured in "person-months."
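A minimal Python sketch of the RICE formula, using the Impact and Confidence scales listed above. The two initiatives and their numbers are hypothetical; Reach is per month and Effort is in person-months, as in the examples.

```python
from dataclasses import dataclass

# Scales as described above: Impact from 0.5 to 3, Confidence as a fraction.
IMPACT = {"massive": 3.0, "high": 2.0, "medium": 1.0, "low": 0.5}
CONFIDENCE = {"high": 1.0, "medium": 0.8, "low": 0.5}


@dataclass
class Initiative:
    name: str
    reach: float      # people affected per period, e.g. customers per month
    impact: str       # key into IMPACT
    confidence: str   # key into CONFIDENCE
    effort: float     # person-months across product, design, and engineering

    def rice(self) -> float:
        """(Reach x Impact x Confidence) / Effort."""
        if self.effort <= 0:
            raise ValueError(f"{self.name}: effort must be positive")
        return self.reach * IMPACT[self.impact] * CONFIDENCE[self.confidence] / self.effort


# Hypothetical backlog items: a small UI tweak versus a major integration.
backlog = [
    Initiative("Inline-edit UI tweak", reach=2_000, impact="low", confidence="high", effort=0.5),
    Initiative("CRM integration", reach=500, impact="massive", confidence="medium", effort=4),
]

for item in sorted(backlog, key=Initiative.rice, reverse=True):
    print(f"{item.name}: {item.rice():.0f}")
```

Here the cheap tweak wins (2,000 versus 300) because its low effort outweighs the integration's higher impact. Even at high Confidence the integration would only reach 375, and that kind of sensitivity check is easy once the formula lives in code.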

By putting a number on these elements, RICE helps you compare wildly different ideas, like a tiny UI tweak versus a major new integration, on an even playing field. It’s a fantastic framework for more established products with a solid user base and good access to real data.

The ICE Framework: Prioritizing for Speed and Momentum

The ICE scoring model is a simpler, faster alternative to RICE. It’s a favorite among early-stage startups and teams that need to move fast without getting stuck in "analysis paralysis." It boils the decision down to just three core variables.

The formula is even simpler: Impact x Confidence x Ease.

Each factor is typically rated on a quick 1 to 10 scale. The goal isn't scientific precision; it's about forcing a structured conversation to quickly surface the most promising ideas. ICE really shines when you have limited data and need to lean on informed intuition to guide your next move.

The real magic of ICE is its speed. It gives you a "good enough" prioritization in minutes, not days, making it perfect for agile teams that value quick iteration and learning over exhaustive upfront planning.
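A minimal ICE sketch in Python, assuming the multiplicative form given above with each factor rated from 1 to 10 (some teams average the three factors instead). The ideas and their ratings are hypothetical.

```python
def ice_score(impact: int, confidence: int, ease: int) -> int:
    """Impact x Confidence x Ease, each rated from 1 to 10."""
    for label, value in (("impact", impact), ("confidence", confidence), ("ease", ease)):
        if not 1 <= value <= 10:
            raise ValueError(f"{label} must be between 1 and 10, got {value}")
    return impact * confidence * ease


# Hypothetical ideas rated in a quick team session: (impact, confidence, ease).
ideas = {
    "Onboarding checklist": (7, 6, 8),
    "Dark mode": (3, 8, 6),
    "AI-generated summaries": (9, 3, 2),
}

ranked = sorted(ideas, key=lambda name: ice_score(*ideas[name]), reverse=True)
for name in ranked:
    print(name, ice_score(*ideas[name]))
```

The highest-impact idea lands last because low confidence and low ease drag it down, which is the point of forcing all three numbers into the conversation.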

Weighted Scoring: Custom-Fit to Your Strategic Goals

So, what happens when your company's #1 priority is reducing churn, but the standard frameworks don't really account for it? That’s exactly where Weighted Scoring comes in. This is a highly adaptable decision-making framework that lets you define your own criteria and assign weights based on what matters most to your business right now.

For instance, your team could create a model with criteria like this:

- Reduces Churn Risk (Weight: 40%)

- Drives New User Acquisition (Weight: 30%)

- Improves User Satisfaction (NPS) (Weight: 20%)

- Aligns with Q3 Strategy (Weight: 10%)

Each potential feature is then scored (say, from 1 to 100) against each criterion, each score is multiplied by that criterion's weight, and the products are summed into a final weighted score. This approach ensures your product roadmap is a direct reflection of your most important business objectives, whatever they may be.
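A minimal Python sketch of Weighted Scoring using the four example criteria and weights above. The two features and their per-criterion scores are hypothetical.

```python
# Criteria and weights from the example above; scores run from 1 to 100.
WEIGHTS = {
    "reduces_churn_risk": 0.40,
    "drives_acquisition": 0.30,
    "improves_satisfaction": 0.20,
    "aligns_with_q3_strategy": 0.10,
}


def weighted_score(scores: dict[str, float], weights: dict[str, float] = WEIGHTS) -> float:
    """Multiply each criterion score by its weight and sum the results."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    missing = weights.keys() - scores.keys()
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(weights[criterion] * scores[criterion] for criterion in weights)


# Hypothetical features scored against each criterion.
usage_alerts = {"reduces_churn_risk": 90, "drives_acquisition": 20,
                "improves_satisfaction": 60, "aligns_with_q3_strategy": 50}
social_login = {"reduces_churn_risk": 10, "drives_acquisition": 85,
                "improves_satisfaction": 40, "aligns_with_q3_strategy": 70}

print(round(weighted_score(usage_alerts), 1))
print(round(weighted_score(social_login), 1))
```

Usage alerts come out ahead (59 versus 44.5) because the 40% churn weight dominates. If strategy shifted toward acquisition, you would change the weights once rather than re-score every feature.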

Cost of Delay: The Economic Viewpoint

Cost of Delay (CoD) flips the whole script. Instead of asking, "How valuable is this?" it asks a much more urgent question: "How much money are we losing for every week we don't ship this feature?" This framework forces a powerful, economic perspective on everything you do.

To put a number on it, you estimate the value a feature will deliver per unit of time once it ships (like weekly revenue). That rate is its cost of delay, because it is what you give up for every week the feature isn't live. Dividing it by the estimated build time produces a score often called CD3 (Cost of Delay Divided by Duration), which helps you prioritize the work that delivers the most value in the shortest amount of time. CoD is incredibly potent for teams that can directly link features to revenue, like in e-commerce or subscription SaaS.
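A minimal Python sketch of CD3 (Cost of Delay Divided by Duration), plus a helper that totals the delay cost of a build order so you can see why ranking this way pays off. It assumes each feature has a constant weekly cost of delay, that the team builds one feature at a time, and that delay cost accrues until a feature ships; the features and dollar figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Feature:
    name: str
    cost_of_delay_per_week: float  # value forgone for each week the feature isn't live
    duration_weeks: float          # estimated build time

    def cd3(self) -> float:
        """Cost of Delay Divided by Duration."""
        if self.duration_weeks <= 0:
            raise ValueError(f"{self.name}: duration must be positive")
        return self.cost_of_delay_per_week / self.duration_weeks


def total_delay_cost(sequence: list[Feature]) -> float:
    """Delay cost accrued when features are built one after another in this order."""
    elapsed_weeks, cost = 0.0, 0.0
    for feature in sequence:
        elapsed_weeks += feature.duration_weeks  # this feature ships at elapsed_weeks
        cost += feature.cost_of_delay_per_week * elapsed_weeks
    return cost


backlog = [
    Feature("Usage-based billing", cost_of_delay_per_week=15_000, duration_weeks=10),
    Feature("SSO for enterprise", cost_of_delay_per_week=8_000, duration_weeks=4),
    Feature("CSV export fix", cost_of_delay_per_week=1_500, duration_weeks=0.5),
]

by_cd3 = sorted(backlog, key=Feature.cd3, reverse=True)
print([f.name for f in by_cd3])
print(f"CD3 order: ${total_delay_cost(by_cd3):,.0f}")
print(f"Listed order: ${total_delay_cost(backlog):,.0f}")
```

With these numbers the CD3 order accrues $254,250 of delay cost against $283,750 for the listed order: the small fix ships first because it is quick to finish, not because it is the most valuable. For a single team working sequentially, ordering by value divided by duration minimizes total weighted delay (the weighted-shortest-processing-time rule from scheduling theory), but that guarantee weakens if work runs in parallel or costs of delay change over time.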

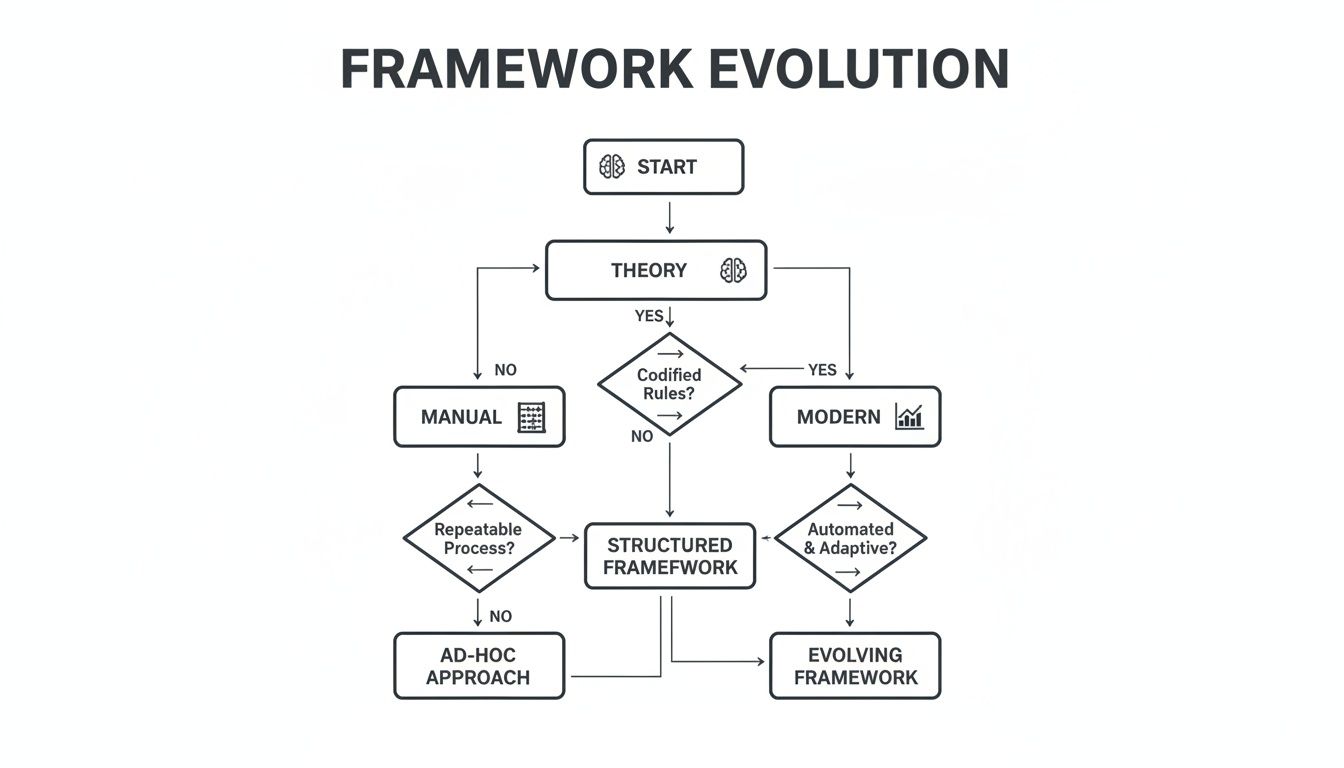

This flowchart shows how these kinds of frameworks have grown from abstract ideas into the sophisticated, data-driven tools we rely on today.

As you can see, the trend is clearly moving toward models that are more structured and repeatable, helping us reduce our reliance on pure guesswork.

To help you sort through these options, here's a quick side-by-side comparison.

Choosing Your Product Prioritization Framework

A side-by-side comparison of the most common decision-making frameworks to help SaaS teams select the best model for their needs.

| Framework | Best For | Key Pro | Key Con |
|---|---|---|---|
| RICE | Mature products with lots of data and diverse ideas. | Balances multiple factors objectively. | Can be slow if data is hard to get. |
| ICE | Startups and agile teams needing to move very quickly. | Extremely fast and simple to implement. | Can be overly subjective; relies on intuition. |
| Weighted Scoring | Teams with very specific, unique strategic goals. | Highly customizable to business priorities. | Requires upfront work to define criteria and weights. |
| Cost of Delay | Revenue-focused teams where time-to-market is critical. | Puts an economic lens on all decisions. | Can be difficult to calculate for non-revenue features. |

Ultimately, there's no single "best" framework. A data-rich scale-up will get huge value from RICE, while a nimble startup might thrive with the speed of ICE. For a deeper dive into even more models, check out A Practical Guide to Product Management Frameworks for additional context.

The real key is to pick a system that brings clarity, not more complexity, to your team’s decision-making process.

Powering Your Framework with Revenue and Behavior Data

A decision-making framework, whether it's RICE or a custom Weighted Scoring model, is only as good as the information you feed it. This is the single biggest point of failure for most product teams. They adopt a structured system but continue to fill it with subjective, gut-feel inputs for critical variables like 'Impact' or 'Confidence.'

When you rely on guesswork, you haven't really solved the problem—you've just given it a fancy new name. The framework becomes a theoretical exercise. It's a clean-looking spreadsheet that still reflects internal politics and personal opinions rather than what the market actually needs. You’re still just guessing what will drive growth.

The real transformation happens when you move beyond these subjective inputs and connect your framework directly to objective, quantifiable data. This is where you turn a simple scoring system into a powerful engine for business growth, one that runs on real customer behavior and hard revenue signals.

Beyond Subjectivity: Connecting to Real Signals

Instead of a product manager guessing an 'Impact' score of "high," imagine a system that automatically assigns a score based on tangible data. This means plugging your decision-making framework into the tools your business already uses every day to talk to customers.

The goal is to find the hidden patterns in your operational data that correlate with business outcomes you care about—like customer churn, account expansion, or new sales.

- Support Tickets: Look at platforms like Zendesk or Intercom to spot recurring bugs or feature requests coming from your highest-value customers. A feature requested by ten enterprise accounts at risk of churn should carry much more weight than one requested by a hundred free-tier users.

- Sales Calls: Sift through call transcripts to pinpoint the feature gaps that consistently come up as deal-breakers for prospects. If losing a $100,000 deal is a common story because of a missing integration, that information needs to go straight into your prioritization.

- Usage Metrics: Track in-app behavior. See which features are used by customers who upgrade their plans versus those who eventually churn. This creates a direct, undeniable link between product engagement and revenue.

By connecting these data streams, you build a system that reflects what will actually make or save your company money. Your framework stops being a static document and becomes a dynamic tool that automatically surfaces the highest-leverage opportunities.
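The support-ticket point lends itself to a short Python sketch. It assumes requests have already been exported from your help desk and tagged with a feature name, an account ID, the account's ARR, and a churn-risk flag; the `Request` shape, the 2x at-risk multiplier, and the sample data are hypothetical, not any tool's actual schema.

```python
from collections import defaultdict
from dataclasses import dataclass

CHURN_RISK_MULTIPLIER = 2.0  # hypothetical boost for at-risk accounts; tune it during calibration


@dataclass(frozen=True)
class Request:
    feature: str
    account_id: str
    arr: float         # annual recurring revenue of the requesting account
    churn_risk: bool   # e.g. flagged by customer success


def revenue_weighted_demand(requests: list[Request]) -> dict[str, float]:
    """Sum ARR per feature, counting each account once and boosting at-risk accounts."""
    seen: set[tuple[str, str]] = set()
    totals: dict[str, float] = defaultdict(float)
    for r in requests:
        if (r.feature, r.account_id) in seen:
            continue  # repeat tickets from one account shouldn't inflate demand
        seen.add((r.feature, r.account_id))
        totals[r.feature] += r.arr * (CHURN_RISK_MULTIPLIER if r.churn_risk else 1.0)
    return dict(totals)


# Ten at-risk enterprise accounts versus a hundred free-tier users.
requests = [Request("Audit log", f"ent-{i}", 60_000, True) for i in range(10)]
requests += [Request("Emoji reactions", f"free-{i}", 0, False) for i in range(100)]

print(revenue_weighted_demand(requests))
```

A raw ticket count would rank emoji reactions ten times higher; weighting by revenue reverses that. Free-tier demand scores zero here, so in practice you might feed it in through a separate acquisition signal rather than ignore it entirely.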

Turning Data into Actionable Insight

The shift toward data-driven and AI-infused decision-making has picked up serious speed in recent years. It allows SaaS teams to process huge volumes of feedback with incredible precision. A 2023 Gartner report noted that 85% of enterprises use AI for decisions, boosting outcomes by 20-30% with real-time analytics.

This is especially vital in SaaS. Tools like SigOS, for example, show that usage metrics can predict revenue with 87% accuracy. For product teams, this means you can ingest Zendesk tickets and Jira issues to score feature requests by churn risk. This helps cut down on the massive development waste—a Forrester study found SaaS firms lose a staggering $1.2 trillion yearly to poor prioritization.

A feature request that consistently appears in support tickets from enterprise customers who later churn should automatically receive a high 'Impact' score. This is how you shift from reactive firefighting to proactive, revenue-driven development.
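One way to make that automatic is to map the revenue at stake behind a request onto the RICE Impact scale (3, 2, 1, 0.5). This is a minimal sketch; the dollar thresholds are hypothetical placeholders that each team would set from its own account sizes.

```python
# Hypothetical thresholds: (minimum ARR at stake, RICE Impact score), highest first.
IMPACT_THRESHOLDS = [
    (500_000, 3.0),   # massive
    (100_000, 2.0),   # high
    (25_000, 1.0),    # medium
    (0, 0.5),         # low
]


def impact_from_arr(arr_at_stake: float) -> float:
    """Translate dollars of ARR tied to a request into an Impact score."""
    if arr_at_stake < 0:
        raise ValueError("ARR at stake cannot be negative")
    # The final threshold is 0, so every non-negative value matches some tier.
    return next(score for minimum, score in IMPACT_THRESHOLDS if arr_at_stake >= minimum)


for arr in (1_200_000, 150_000, 30_000, 0):
    print(f"${arr:,} -> Impact {impact_from_arr(arr)}")
```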

Powering your framework with this kind of data requires more than just collecting it, of course. You have to make sense of it. Learning how to turn big data into clear dashboards that drive decisions is crucial for making choices that your whole team can understand and get behind.

The Role of an Intelligence Platform

Let's be realistic: manually connecting all these dots is a massive job that few teams have the time or resources for. This is where an AI-driven intelligence platform like SigOS becomes so valuable. It acts as the central nervous system for your decision-making framework, doing the heavy lifting of data ingestion, analysis, and correlation for you.

Instead of spending weeks trying to piece together disparate reports, you get a unified view that quantifies the dollar value of every bug and feature request. You can also learn more about how to use behavior analytics to improve your product strategy in our dedicated guide.

A system like this works 24/7 to find the signal in the noise, alerting you to emerging patterns that predict churn risk or identify high-value opportunities. By automating the data-gathering part of the process, you free your team to focus on what they do best: building a great product that solves real customer problems and drives sustainable growth. And that, after all, is the ultimate goal of any effective decision-making framework.

How to Build and Implement Your Own Framework

Knowing about different models is one thing, but putting a custom decision-making framework into practice is where you really start to see the results. Building your own isn’t about creating some complex, rigid system overnight. It’s about starting with a simple, practical structure that actually fits your team's needs and grows with you.

The goal is progress, not perfection. Think of it less like building a skyscraper and more like planting a garden. You start with good soil (your objectives), plant a few strong seeds (your initial framework), and then you tend to it over time, adapting as you go.

Here’s a clear, five-step process to create a living system that becomes the undisputed source of truth for your team's prioritization.

Step 1: Define Your Core Business Objectives

Before you even think about formulas or scores, you have to anchor your framework to what your business is actually trying to achieve. A framework without clear goals is just a process for the sake of process. You need to get specific and make your objectives measurable.

Are you trying to grow your user base, keep the customers you have, or move upmarket? Your answer will completely change the criteria you use to evaluate ideas.

- Example Objective 1: Reduce monthly customer churn by 15% within the next six months.

- Example Objective 2: Increase the free-to-paid conversion rate from 4% to 6% by the end of Q4.

- Example Objective 3: Boost enterprise user adoption of our new analytics module by 25%.

These objectives become the North Star for everything you do. Every decision should be a clear step toward one of them.

Step 2: Select a Starting Framework

Now that you know what you’re aiming for, there's no need to reinvent the wheel. Just pick one of the established frameworks we talked about earlier as your starting point. The trick is to choose one that naturally lines up with your team’s maturity and strategic goals.

If your main goal is slashing churn, a Weighted Scoring model is perfect because you can give a heavy weight to "Churn Reduction Impact." If you're an early-stage team that just needs to move fast, the beautiful simplicity of ICE might be your best bet.

Your first framework is just a template. You aren't locked into it forever. The goal is to get something functional up and running quickly so you can start learning and making it better.

Step 3: Identify and Connect Your Data Signals

This is the most critical step for moving beyond guesswork. A truly powerful decision-making framework runs on objective data, not just the loudest opinions in the room. You need to identify where the most valuable customer and business signals live inside your organization.

Common data sources include:

- Customer Support Systems: Tools like Zendesk or Intercom are absolute goldmines for understanding customer pain points.

- CRM and Sales Platforms: Your data in Salesforce or HubSpot can show you which feature gaps are blocking high-value deals.

- Product Analytics Tools: Usage data tells you what customers are actually doing in your product, highlighting friction or new opportunities.

- Internal Tools: Don’t forget about Jira or Linear, which track bug reports and internal requests.

Connecting these signals is what allows you to automatically quantify the 'Impact' of a feature request. For a deeper dive, our guide on creating a feature prioritization matrix can help add more structure.

Step 4: Calibrate Your Scoring Model

Once you have data flowing in, you need to make sure the output actually makes sense. The first scores your new framework spits out will almost certainly need some adjustment. This calibration process is all about ensuring the final priority list reflects reality.

Take a few initiatives you already understand well and run them through your new model. Does a critical bug fix that’s hammering your top ten customers get a high score? Does that minor UI tweak requested by a single user score appropriately low?

If the scores feel off, it’s a sign your weights or criteria need adjusting. This step is about fine-tuning the model until it consistently produces a priority list your team can genuinely trust and get behind.
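The calibration step can be turned into a repeatable check: list pairs of initiatives whose relative order you already trust, and flag any pair the model gets backwards. A minimal sketch follows; the two scoring functions and the benchmark data are hypothetical stand-ins for your real model.

```python
from typing import Callable

Scorer = Callable[[dict], float]


def calibration_failures(score: Scorer,
                         initiatives: dict[str, dict],
                         expected_order: list[tuple[str, str]]) -> list[tuple[str, str]]:
    """Return every (should_rank_higher, should_rank_lower) pair the model inverts or ties."""
    return [
        (higher, lower)
        for higher, lower in expected_order
        if score(initiatives[higher]) <= score(initiatives[lower])
    ]


benchmarks = {
    "critical_bug_top_10_customers": {"reach": 10, "arr_at_stake": 900_000, "effort": 1.0},
    # Visible to everyone, but only one user asked for it.
    "minor_ui_tweak_one_request": {"reach": 5_000, "arr_at_stake": 2_000, "effort": 0.5},
}
expected = [("critical_bug_top_10_customers", "minor_ui_tweak_one_request")]


def reach_only(item: dict) -> float:
    return item["reach"] / item["effort"]


def revenue_aware(item: dict) -> float:
    return item["arr_at_stake"] / item["effort"]


print(calibration_failures(reach_only, benchmarks, expected))
print(calibration_failures(revenue_aware, benchmarks, expected))
```

The reach-only model fails the check because the UI tweak touches more users, a sign its criteria need adjusting; the revenue-aware model passes. Keeping these benchmark pairs around lets you re-run the check whenever weights or criteria change.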

Step 5: Establish a Routine for Review and Iteration

Your framework shouldn't be a static document that gathers dust on a shelf. It has to be a living system that adapts as your business goals and the market inevitably change. Set up a regular cadence—monthly or quarterly—to review and refine the whole process.

During these reviews, ask the tough questions:

- Are our core objectives still the right ones?

- Is our scoring model accurately predicting the impact of our work?

- Are there new data signals we should be plugging in?

- What's the team's feedback on using the framework day-to-day?

This continuous feedback loop is what transforms a good decision-making framework into a great one. It ensures your system remains a powerful tool that creates alignment and drives meaningful results across the entire company.

Common Questions About Decision-Making Frameworks

Let's be real. Even the most elegant decision-making framework can hit a wall of skepticism and practical hurdles when you try to roll it out. Adopting a new process isn't just about tweaking spreadsheets and scores; it's about changing deeply ingrained habits and getting everyone on the same page.

Moving from gut-feel planning to a more structured system is a big cultural shift. So, it's completely normal for your team and stakeholders to have a few questions. This section tackles the most common concerns head-on, giving you clear answers to navigate the real-world challenges of building a smarter, more objective product culture.

How Do We Get Stakeholder Buy-In?

Getting executives and other department heads on board is often the first—and biggest—challenge. They’re used to having a direct line to influence the roadmap, and a new framework can easily feel like you're taking away their control. The trick is to frame it not as a barrier, but as a tool for achieving their goals faster and more predictably.

Instead of presenting the framework as a rigid set of rules, show them how it brings clarity and objectivity to the usual chaos. Walk them through how it connects the team's day-to-day work directly to the business objectives they care about, whether that's reducing churn, boosting revenue, or entering a new market.

"Getting to the ‘right answer’ without anybody supporting it or having to execute it is just a recipe for failure. The quality of the decision is only one part of the equation; implementation is the other."

Here are a few ways to build that trust:

- Run a Pilot Program: Test the framework on a small, contained set of decisions first. This is a low-risk way to showcase its value without disrupting the entire organization.

- Involve Them in the Process: When you're building a weighted scoring model, ask key stakeholders for their input on the criteria and their weights. Giving them a hand in its creation gives them a real sense of ownership.

- Focus on the Wins: Be relentless about communicating how the framework is helping the team make smarter decisions that deliver measurable results. Connect the dots for them.

What if a Decision Is Time-Sensitive?

"This will just slow us down!" is a common fear, especially when a critical bug pops up or a sudden market opportunity appears. But a good framework should be flexible enough to handle emergencies without being tossed aside. The solution isn't to bypass the system; it's to adapt it for speed.

For these high-urgency situations, you can create an "expedited" path. This could be as simple as using a quick model like ICE for a gut check or having a pre-defined "emergency" flag that automatically elevates an item's priority. The framework’s job is to provide clarity, not bureaucracy. For example, an unexpected issue threatening your largest customers would naturally score off the charts on 'Impact' and 'Urgency,' justifying an immediate response within the system.
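An emergency flag can live inside the same ranking logic instead of bypassing it. In this minimal sketch the `emergency` field and the sample items are hypothetical, and `score` stands for whatever your normal framework produces.

```python
from dataclasses import dataclass


@dataclass
class BacklogItem:
    name: str
    score: float             # output of the team's usual framework (RICE, ICE, ...)
    emergency: bool = False  # hypothetical pre-defined flag for the expedited path


def prioritize(items: list[BacklogItem]) -> list[BacklogItem]:
    """Flagged emergencies first, then everything by descending score."""
    # False sorts before True, so `not emergency` puts flagged items at the front.
    return sorted(items, key=lambda item: (not item.emergency, -item.score))


backlog = [
    BacklogItem("New reporting dashboard", score=900),
    BacklogItem("Onboarding revamp", score=500),
    BacklogItem("Login outage for largest customers", score=40, emergency=True),
]

for item in prioritize(backlog):
    print(item.name)
```

Flagged items still carry a score, so two simultaneous emergencies are ordered by the same logic as everything else, and the flag leaves a visible record of what was expedited.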

How Do We Handle Subjectivity?

Look, no framework is going to completely eliminate human judgment, and that's a good thing. The goal is to reduce our reliance on pure guesswork and make our assumptions transparent, not to pretend that experience and intuition don't matter. Variables like 'Confidence' in the RICE model are there for a reason—they're designed to capture the team's collective gut feeling in a structured way.

The real power of a good framework is that it makes subjectivity visible and consistent. By forcing the team to put a number on something like "confidence," you create a starting point for a healthy debate and a way to challenge each other's assumptions constructively.

Here’s how you can manage those subjective inputs:

- Define a Clear Scale: Don't leave scores open to interpretation. Create clear definitions. For 'Impact,' a '3' might mean "affects all users," while a '1' means "affects a niche segment."

- Gather Multiple Perspectives: Don't let one person score everything. Pull in input from engineering, design, marketing, and customer success to get a more balanced and realistic view.

- Calibrate and Review: Look back at past decisions. Was the team's 'Impact' score accurate in hindsight? As the McKinsey survey cited earlier found, 60 percent of executives feel bad decisions are made just as often as good ones. Regular calibration is the only way to get better over time.
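The first two practices can be combined in a few lines: score against written level definitions, collect one score per function, and flag wide disagreement for discussion instead of silently averaging it away. The definitions for levels 3 and 1 come from the example above; level 2, the role names, and the spread threshold are hypothetical.

```python
from statistics import median

# Written definitions for each Impact level; level 2 is a hypothetical middle tier.
IMPACT_SCALE = {
    3: "affects all users",
    2: "affects most active accounts",
    1: "affects a niche segment",
}


def combine_scores(scores_by_role: dict[str, int], max_spread: int = 1) -> tuple[float, bool]:
    """Return the median Impact score and whether the team should discuss the spread."""
    for role, level in scores_by_role.items():
        if level not in IMPACT_SCALE:
            raise ValueError(f"{role} used undefined level {level}; allowed: {sorted(IMPACT_SCALE)}")
    levels = list(scores_by_role.values())
    needs_discussion = max(levels) - min(levels) > max_spread
    return median(levels), needs_discussion


scores = {"engineering": 1, "design": 2, "marketing": 3, "customer_success": 3}
print(combine_scores(scores))
```

Using the median keeps one outlier from dragging the result, while the discussion flag surfaces exactly the disagreements the framework is meant to make visible.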

By embracing these practices, you can ensure your framework remains a practical and trusted tool for navigating the messy reality of building great products.

Ready to power your decision-making framework with real-time revenue and behavior data? SigOS ingests customer feedback from support tickets, sales calls, and usage metrics to automatically quantify the dollar value of every feature request and bug. Stop guessing and start prioritizing with confidence.
